LoRA-Dash

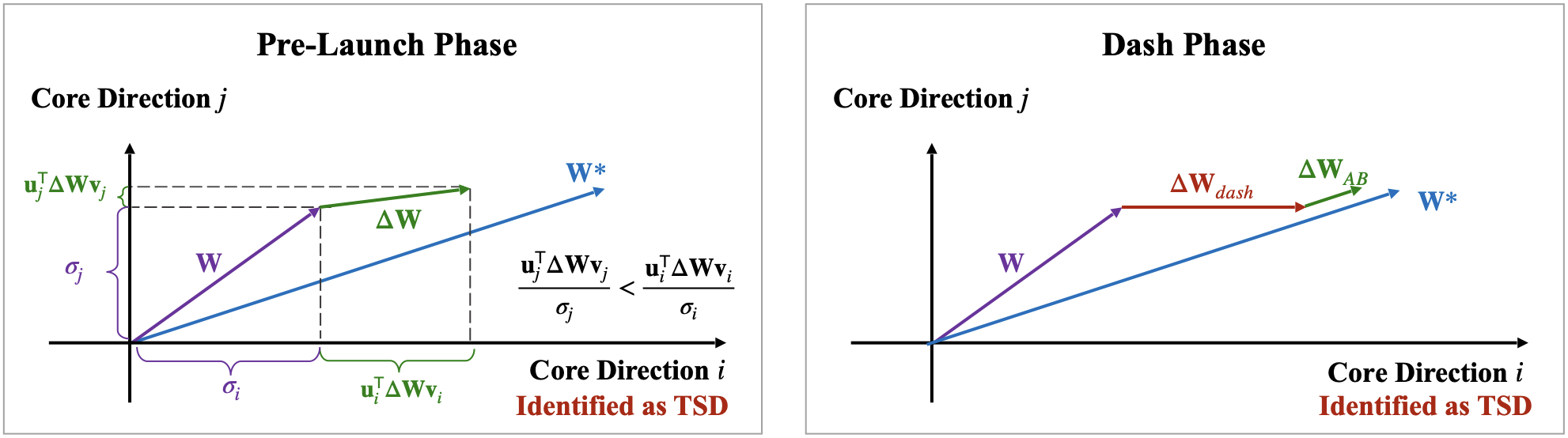

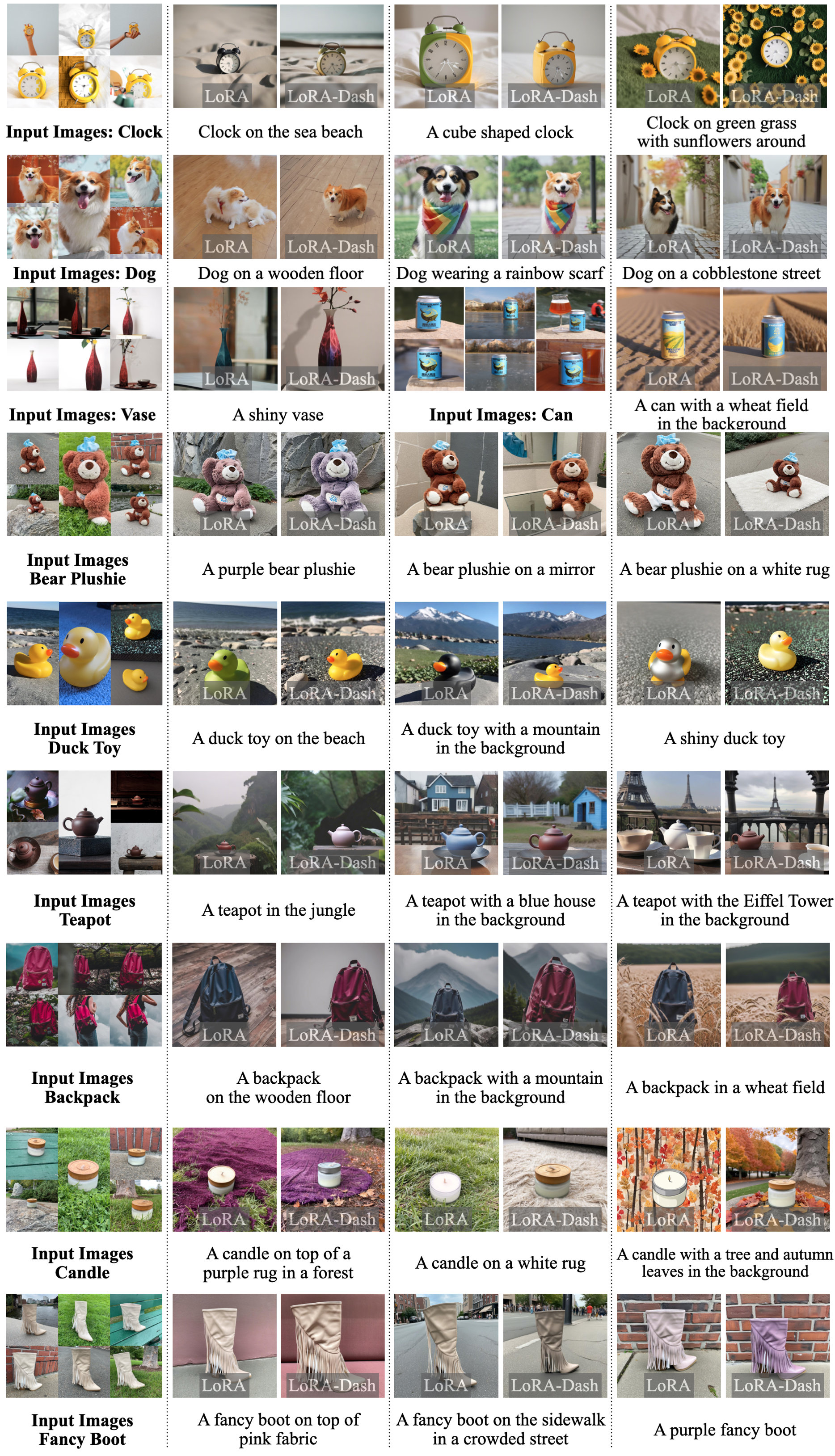

LoRA-Dash comprises two phases: a pre-launch phase that identifies the task-specific directions (TSDs), and a dash phase that maximizes their potential.
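The two phases can be illustrated with a small NumPy sketch. It rests on assumptions that this README does not state: a "direction" is taken to be a rank-one singular pair u_i v_i^T of the frozen pretrained weight W; the change rate of a direction is taken to be |u_i^T ΔW v_i| / σ_i for a preliminary update ΔW (for example, a LoRA product BA after a short warm-up); and the dash phase is modeled as extra learnable scalars d on the top-s directions. The names `prelaunch_select` and `dash_update`, the parameter `s`, and this exact update form are hypothetical, not the authors' implementation.

```python
import numpy as np

def prelaunch_select(W, delta_W, s, eps=1e-12):
    """Pre-launch (sketch): rank the singular directions u_i v_i^T of the frozen
    weight W by how strongly delta_W changes them, relative to sigma_i.
    ASSUMPTION: change_rate_i = |u_i^T delta_W v_i| / sigma_i."""
    U, sigma, Vt = np.linalg.svd(W, full_matrices=False)
    # diag(U^T delta_W V) holds the projection u_i^T delta_W v_i for each direction i.
    proj = np.diag(U.T @ delta_W @ Vt.T)
    change_rate = np.abs(proj) / np.maximum(sigma, eps)
    top = np.argsort(change_rate)[::-1][:s]  # indices of the s largest change rates
    return U[:, top], Vt[top, :], top

def dash_update(B, A, U_s, Vt_s, d):
    """Dash (sketch): LoRA update B A plus hypothetical learnable scalars d that
    amplify the selected directions: B A + sum_j d_j u_j v_j^T."""
    return B @ A + U_s @ np.diag(d) @ Vt_s

# Toy example: build W with a known spectrum so the expected ranking is clear.
rng = np.random.default_rng(0)
m, n = 6, 4
Q1, _ = np.linalg.qr(rng.normal(size=(m, n)))  # orthonormal columns, shape (6, 4)
Q2, _ = np.linalg.qr(rng.normal(size=(n, n)))  # orthogonal, shape (4, 4)
sigma = np.array([10.0, 5.0, 3.0, 1.0])
W = Q1 @ np.diag(sigma) @ Q2.T

# Equal-sized nudges along the strongest (sigma=10) and weakest (sigma=1) directions;
# relative to its sigma, the weak direction changes 10x more.
delta_W = 0.5 * np.outer(Q1[:, 0], Q2[:, 0]) + 0.5 * np.outer(Q1[:, 3], Q2[:, 3])

U_s, Vt_s, top = prelaunch_select(W, delta_W, s=2)
print(top)  # [3 0]

# Dash: hypothetical LoRA factors plus extra scalars, started at zero so the
# combined update initially equals B A.
r = 2
B, A = rng.normal(size=(m, r)), rng.normal(size=(r, n))
d = np.zeros(2)
update = dash_update(B, A, U_s, Vt_s, d)
```

In the toy example both nudges have the same absolute size, so it is the division by σ_i alone that ranks the weakest direction (index 3) first.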

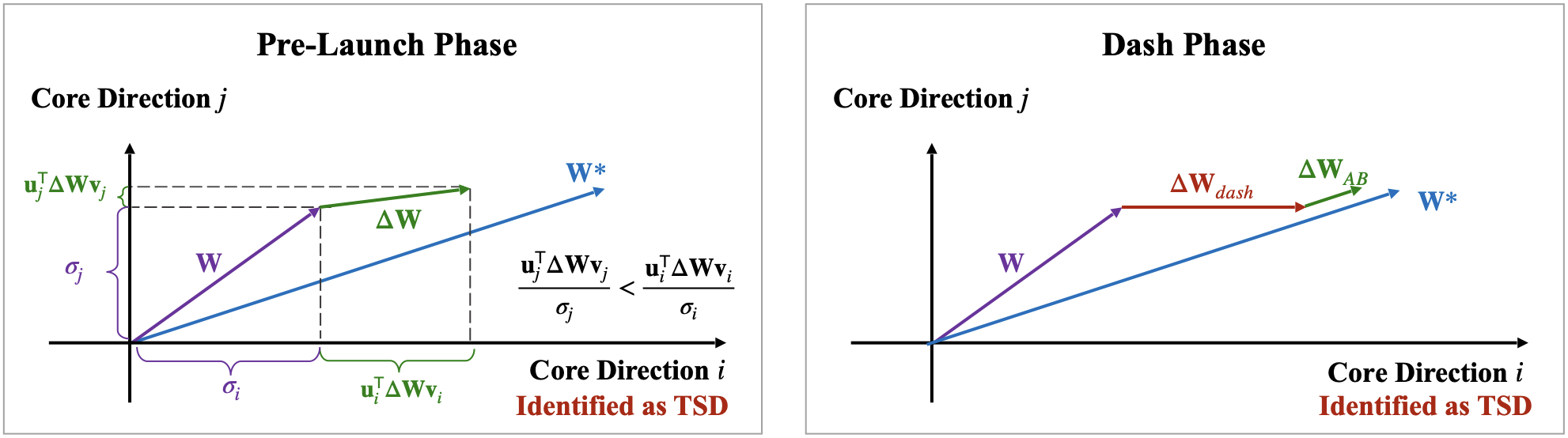

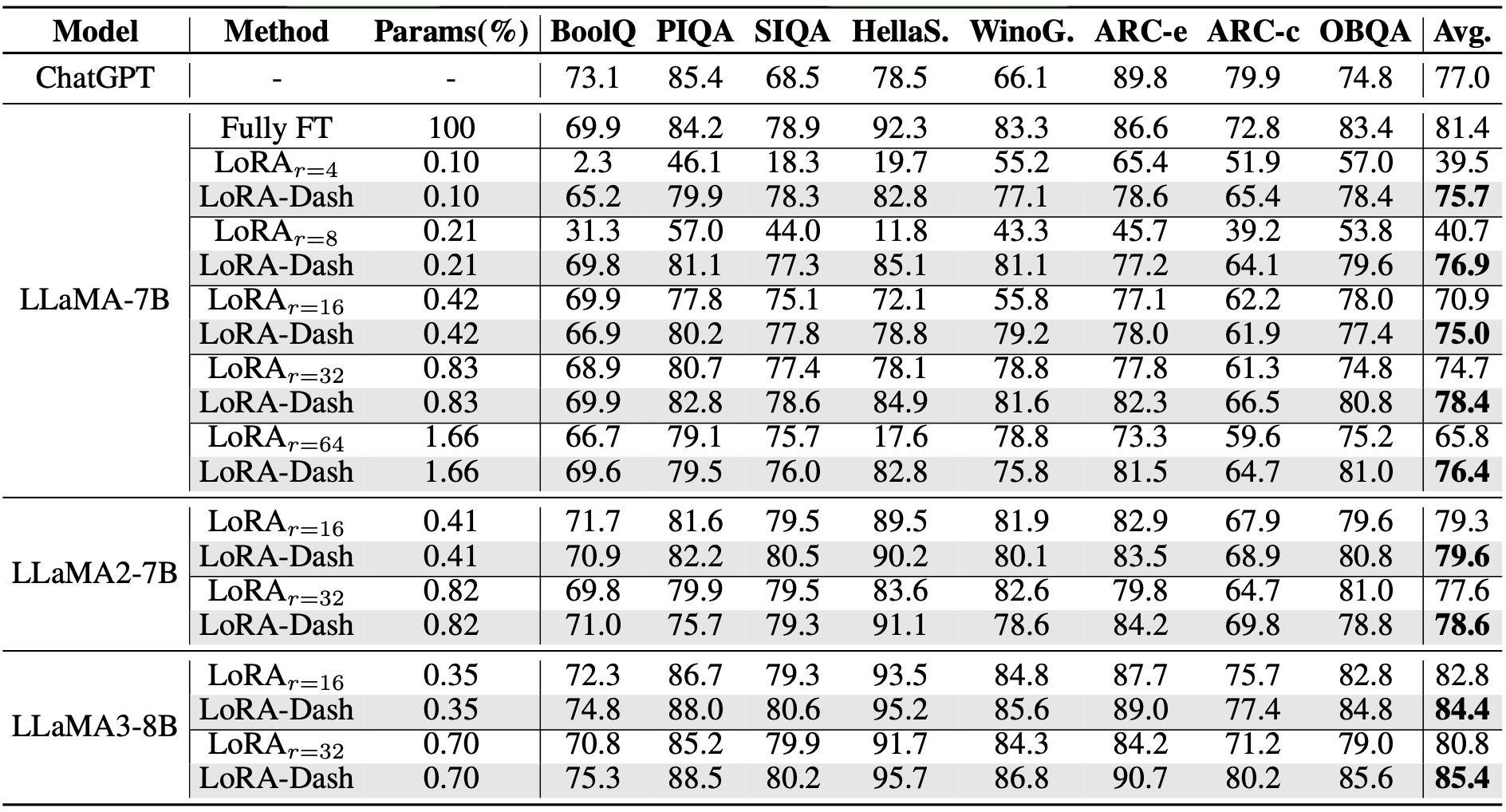

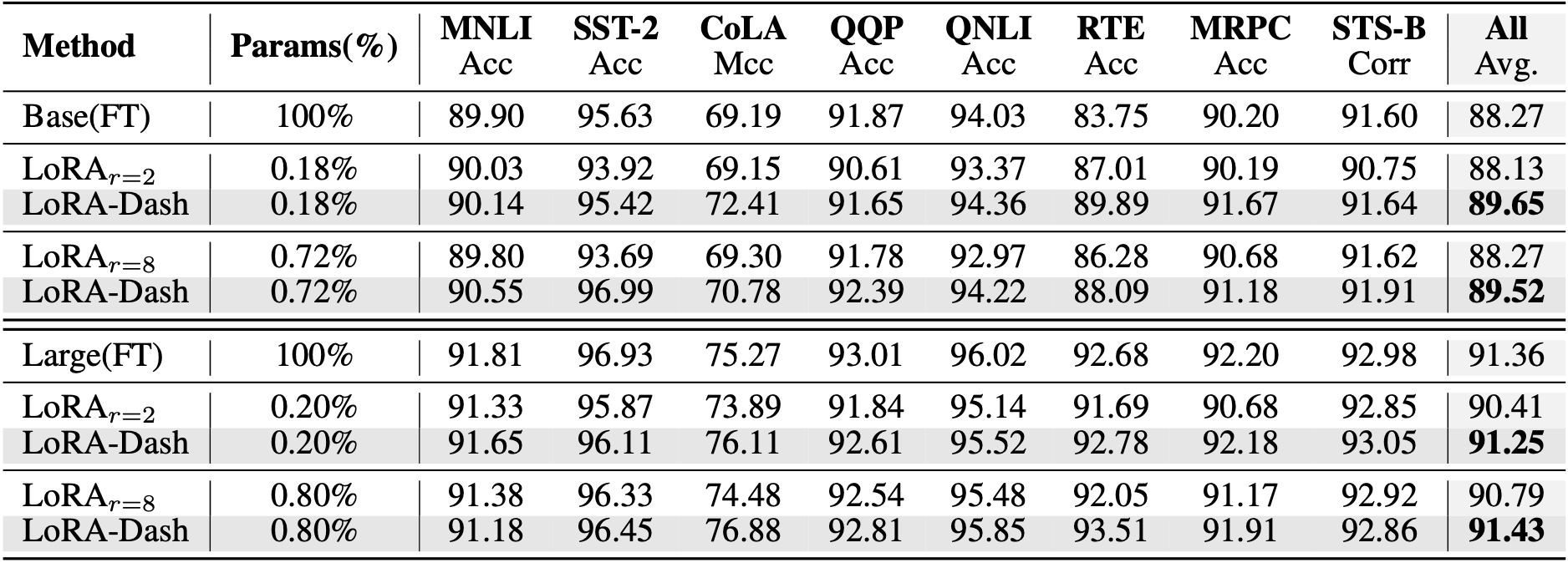

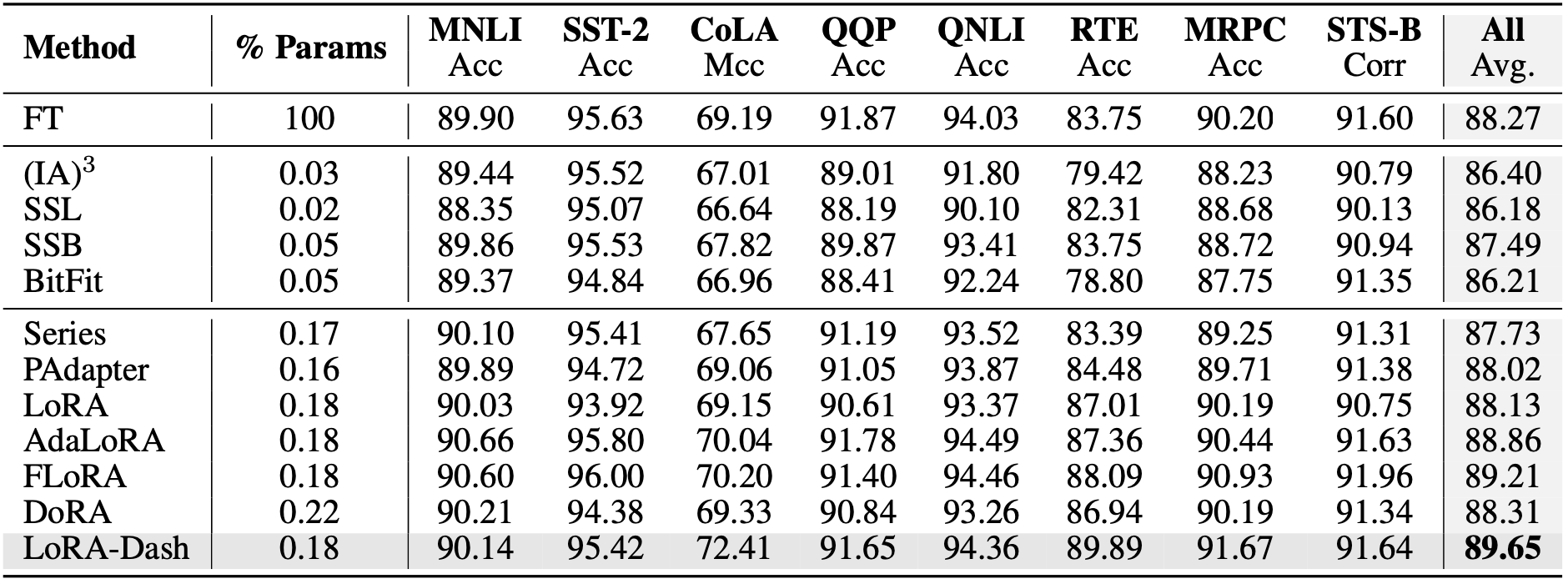

Large language models demonstrate impressive performance on downstream tasks, yet fully fine-tuning all of their parameters consumes extensive resources. To mitigate this, Parameter Efficient Fine-Tuning (PEFT) strategies such as LoRA have been developed. In this paper, we delve into the concept of task-specific directions (TSDs), which are critical for transitioning large models from their pretrained states to task-specific enhancements in PEFT. We propose a framework to clearly define these directions and explore their properties and the challenges of utilizing them in practice. We then introduce a novel approach, LoRA-Dash, which aims to maximize the impact of TSDs during fine-tuning, thereby enhancing model performance on targeted tasks. Extensive experiments demonstrate the effectiveness of LoRA-Dash, and in-depth analyses further reveal its underlying mechanisms.
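For readers new to LoRA, the following minimal NumPy sketch shows the low-rank update that LoRA-style PEFT methods train. It follows the standard LoRA formulation h = W0 x + (alpha / r) B A x, with the pretrained weight W0 frozen and B initialized to zero. The Gaussian initialization of A and its 0.01 scale, the function names, and all dimensions are illustrative assumptions, not details taken from LoRA-Dash.

```python
import numpy as np

def lora_init(d_out, d_in, r, rng):
    """LoRA factors: A is small random noise, B is zero, so B @ A = 0 at step 0."""
    A = rng.normal(0.0, 0.01, size=(r, d_in))  # ASSUMPTION: Gaussian init, std 0.01
    B = np.zeros((d_out, r))
    return A, B

def lora_forward(x, W0, A, B, alpha, r):
    """h = W0 x + (alpha / r) * B A x. W0 is frozen; only A and B would be trained."""
    return W0 @ x + (alpha / r) * (B @ (A @ x))

rng = np.random.default_rng(0)
d_out, d_in, r, alpha = 64, 32, 4, 8  # illustrative sizes
W0 = rng.normal(size=(d_out, d_in))   # stand-in for a pretrained weight
A, B = lora_init(d_out, d_in, r, rng)
x = rng.normal(size=d_in)

# Because B starts at zero, the adapted layer initially reproduces the frozen layer.
print(np.allclose(lora_forward(x, W0, A, B, alpha, r), W0 @ x))  # True

full_params = d_out * d_in        # trainable parameters under full fine-tuning
lora_params = r * (d_in + d_out)  # trainable parameters under LoRA
print(full_params, lora_params)   # 2048 384
```

The rank r bounds the rank of B A, which is what keeps the trainable parameter count at r(d_in + d_out) instead of d_out d_in.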

    @article{si2024unleashing,
      title={Unleashing the Power of Task-Specific Directions in Parameter Efficient Fine-tuning},
      author={Si, Chongjie and Shi, Zhiyi and Zhang, Shifan and Yang, Xiaokang and Pfister, Hanspeter and Shen, Wei},
      journal={arXiv preprint arXiv:2409.01035},
      year={2024}
    }